- Answer. Using the second method, each letter could be one of (26 + 26) different characters. The hacker has to check 526 combinations which is vastly more. Mixing uppercase and lowercase letters and including numbers and special symbols makes a password much more difficult to hack.

|

Algorithm efficiency Learning objectives: 11.5.2.6 to understand the time-efficiency of algorithms Definition

Let's consider two algorithms, which both calculate the sum of the first n integers. Which algorithm is more efficient? Why?

Time efficiency of the algorithm Algorithms may be compared on how much time they need to solve a particular problem. The goal is to design algorithms that will run quickly. In order to compare the efficiency of different algorithms in terms of execution time, we need to quantify the number of basic operations or steps that the algorithm will need, in terms of the number of items to be processed.

Big O

Big-O establishes a worst-case run time. Another mathematical concept used by Big-O is permutations. Permutation relates to the act of arranging all the members of a set into order (described by factorial - meaning that if we need to put N members in order this can be done with N! ways).

O(1) (Constant time) O(1) describes an algorithm that takes constant time (the same amount of time) to execute regardless of the size of the input data set. Suppose array a has n items. The statement

O(log n) (Logarithmic time) The time taken to execute an algorithm of order O(log n) (logarithmic time) will grow very slowly as the size of the data set increases. Doubling the size of the data set has very little effect on the time the algorithm takes to complete. (Note that in Big-O notation the base of the logarithm, 2 in this case, is not specified because it is irrelevant to the time complexity, being a constant factor.) O(n) (Linear time) O(n) describes an algorithm whose performance will grow in linear time, in direct proportion to the size of the data set. For example, a linear search of an array of 1000 unsorted items will take 1000 times longer than searching an array of 1 item.

How many comparisons in the worst case? O(n2) (Polynomial time) O(n2) describes an algorithm whose performance is directly proportional to the square of the size of the data set. A program with two nested loops each performed n times will typically have an order of time complexity O(n2). The running time of the algorithm grows in polynomial time. O(2n) (Exponential time) O(2n) describes an algorithm where the execution time will double with every additional item added to the data set. The execution time grows exponentially and quickly becomes very large. O(n!) (Factorial time) The time taken to execute an algorithm of order O(n!) will grow very quickly, faster than O(2n). Suppose that the problem is to find all the permutations of n letters. If n=2, there are 2 permutations to find. If n=6, there are 720 permutations - far more than 2n, which is only 64.

Space efficiency of algorithm

Space used - the amount of data that the algorithm needs to keep track of at once. First example "Swapping data"

Second example "Look through the items in the list"

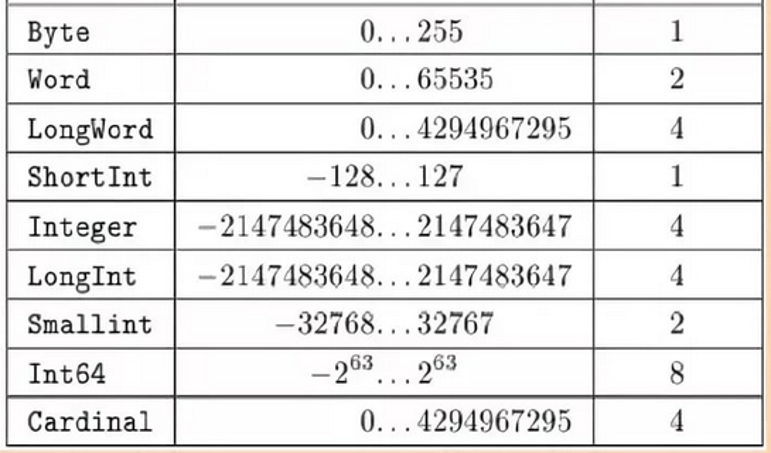

Choose data type When we use the different variables we define the data type for variables. Which data type is appropriate for the code symbol of ASCII? Why would other types of data be inefficient to use? Questions:

Exercises: Ex 1. Arrange the Big O notations from fastest to slowest. Ex 2. Algorithm complexity (Author: Litvinova Olga CS teacher of NIS Pavlodar) Ex 3. Quiz "Algorithm efficiency" (Author: Litvinova Olga CS teacher of NIS Pavlodar) Exam questions: Question. A hacker trying to discover a password starts by checking a dictionary containing 170,000 words. What is the maximum number of words he will need to try out?(Marks: )

Question. This procedure fails to find the password. He now needs to try random combinations of the letters in the password. He starts with 6-letter combinations of a-z, A-Z. Explain why the second procedure will take so much longer than the first.(Marks: ) | |||||||||||||

|

| |||||||||||||

| Просмотров: 8033 | | | |||||||||||||

| Всего комментариев: 0 | |